The robots.txt file tells crawlers which sections, files, or pages of your website they may crawl. It tells Google which pages to crawl, can set a crawl delay for crawlers that honor that directive (Googlebot ignores it), and is mainly used to avoid overloading your website with requests.
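To make this concrete, here is a minimal sketch that parses a small, made-up robots.txt with Python's standard `urllib.robotparser`. The rules, the `www.example.com` domain, and the `is_allowed` helper are assumptions for illustration, not taken from a real site. Python's parser is a simplified model of a crawler, so treat its answers as an approximation of how Googlebot behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from memory, no network request


def is_allowed(path, agent="*"):
    """Return True if `agent` may crawl `path` on the example site."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(is_allowed("/blog/post"))    # True: no rule matches this path
print(is_allowed("/admin/login"))  # False: matches "Disallow: /admin/"
print(parser.crawl_delay("*"))     # 10
```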

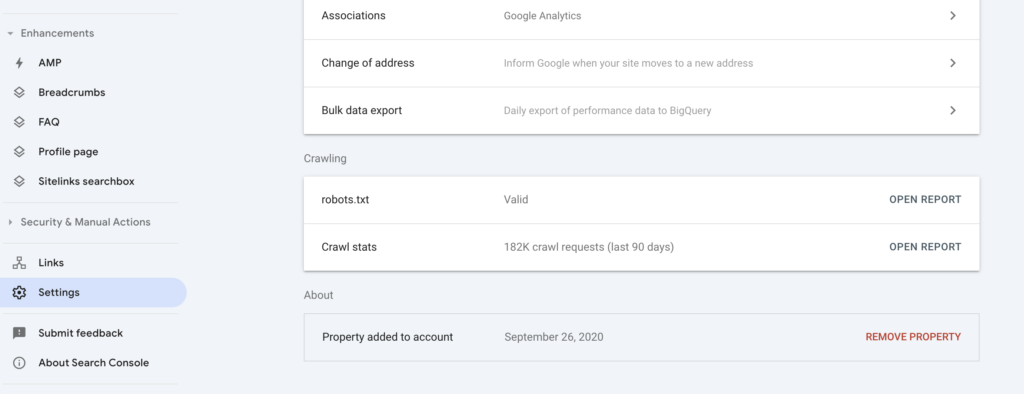

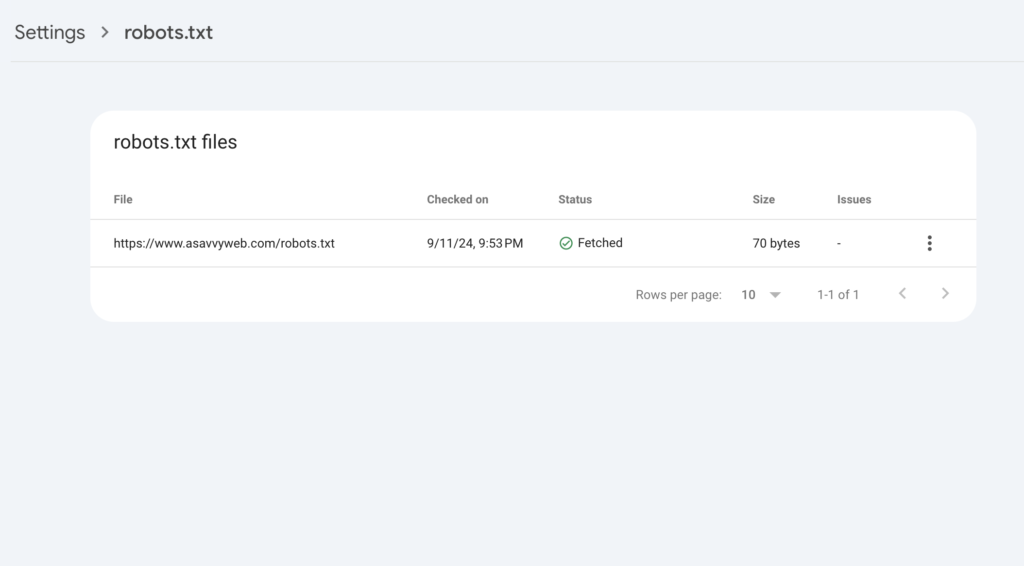

In Search Console, go to Settings, find the robots.txt entry, and click Open Report. You will see the robots.txt file of your website and its status (if it was fetched, you will see Fetched). If there is an error or the robots.txt file is blocked, you will see a blocked or error message here instead.

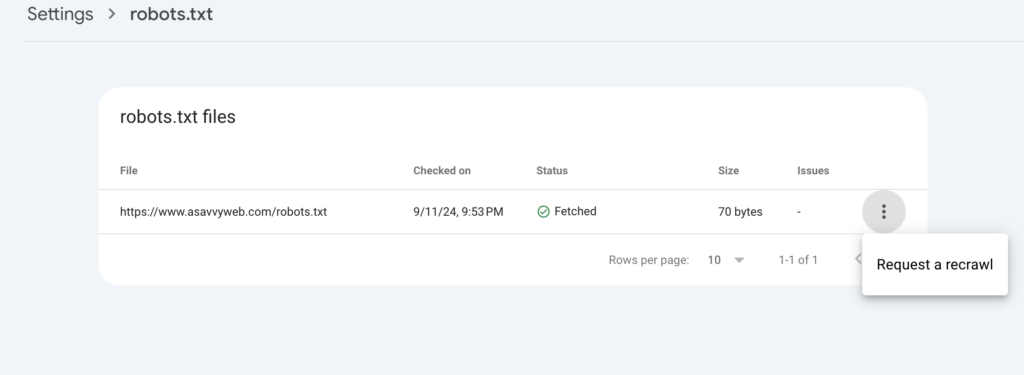

Now click the three dots and then click Request Crawl. Googlebot will crawl the robots.txt file, and if files or parts of the website such as subfolders or images are blocked, it will tell you which pages are blocked.

The robots.txt file is very important for search engine crawlers, but it is not a mechanism for keeping a webpage out of Google. Always check and test the robots.txt file after implementing it on your website. A URL blocked by robots.txt can still end up indexed if it can be found from other resources on the web.

Run a live URL inspection of the URL in Search Console. It will tell you whether the URL is blocked by robots.txt or by something else, such as a noindex meta tag, a CDN, or another issue with the URL.
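The difference between a robots.txt block and a noindex meta tag can be sketched offline. The example below is only a rough approximation of what URL inspection reports, not how Search Console works internally: the `diagnose` function, the `MetaRobotsParser` class, and the sample rules and HTML are all hypothetical. It checks robots.txt first, because a crawler that is blocked there never fetches the page and so never sees its meta tags.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class MetaRobotsParser(HTMLParser):
    """Records whether the page has a robots/googlebot meta tag with noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True


def diagnose(robots_txt, url, html, agent="Googlebot"):
    """Return a short label for why a URL might be kept from Google."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch(agent, url):
        return "blocked by robots.txt"
    meta = MetaRobotsParser()
    meta.feed(html)
    if meta.noindex:
        return "noindex meta tag"
    return "no block found"


# Hypothetical inputs, for illustration only.
SAMPLE_RULES = "User-agent: *\nDisallow: /private/\n"
NOINDEX_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

print(diagnose(SAMPLE_RULES, "https://www.example.com/private/a", NOINDEX_PAGE))
print(diagnose(SAMPLE_RULES, "https://www.example.com/page", NOINDEX_PAGE))
```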

Once you have created a robots.txt file for your website and decided which sections Google should crawl, the next step is to test that the directives work exactly as you intended. If anything goes wrong, Google may stop crawling your website, which can be disastrous and can keep your website out of Google's search index.
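One way to check that directives behave as intended is to write down which URLs should and should not be crawlable and compare that list against the file. The sketch below does this with Python's standard `urllib.robotparser`; the `find_mismatches` helper, the sample rules, and the example URLs are assumptions made up for illustration. Python's parser applies the first matching rule and does not understand wildcards, so it only approximates Google's matching.

```python
from urllib.robotparser import RobotFileParser


def find_mismatches(robots_txt, expectations, agent="Googlebot"):
    """Return (url, expected, actual) for every URL that behaves unexpectedly."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for url, should_allow in expectations.items():
        actual = parser.can_fetch(agent, url)
        if actual != should_allow:
            mismatches.append((url, should_allow, actual))
    return mismatches


# Hypothetical file: "Disallow: /blog" is a mistake that blocks the whole blog.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /blog
"""

# What the site owner wants: True means "should be crawlable".
EXPECTED = {
    "https://www.example.com/": True,
    "https://www.example.com/checkout/step-1": False,
    "https://www.example.com/blog/first-post": True,
}

for url, expected, actual in find_mismatches(ROBOTS_TXT, EXPECTED):
    print(f"{url}: expected allow={expected}, got allow={actual}")
```

Here the only mismatch reported is the blog post, which the stray `Disallow: /blog` rule blocks.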

Related Robots txt Coverage:

1. How to Create Robots txt file for SEO To allow or disallow Website For Google Crawlers

2. How to Fix URLs Blocked by Robots txt File in Google Search Console

3. What is robots txt File in SEO and Uses of Robots File

4. Indexed though blocked by robots txt file issue Fix Errors

Test Robots Txt File in Search Console

Google provides a handy tool for website owners: Google Search Console is one of the best tools Google offers for testing a robots.txt file. It is very simple to check your robots.txt file in Search Console and see whether you are blocking Googlebot. Google has many search engine bots, and Search Console lets you check them all in one place, such as the image bot and others.
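As a rough offline analogue of checking several Google bots at once, the sketch below tests one URL against two Google user-agent tokens, `Googlebot` and `Googlebot-Image`. The rules and the `check_agents` helper are hypothetical. One caveat is that Python's parser picks the first group whose name is contained in the agent name, so the example lists the more specific `Googlebot-Image` group first.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules. The more specific Googlebot-Image group comes first
# because Python's parser uses the first group whose name matches the agent.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /images/private/

User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /admin/
"""

GOOGLE_AGENTS = ["Googlebot", "Googlebot-Image"]


def check_agents(robots_txt, url, agents=GOOGLE_AGENTS):
    """Return {agent: True/False} telling whether each agent may crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


print(check_agents(ROBOTS_TXT, "https://www.example.com/drafts/post"))
print(check_agents(ROBOTS_TXT, "https://www.example.com/images/private/logo.png"))
```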

You can also use tools like the Rich Results Test: enter your website URL and it will crawl the page and report whether the URL is blocked from Google.

Steps to Check Robots txt File

The robots.txt tester tool in Search Console shows whether your robots.txt file blocks Google's web crawlers from specific sections of your website, which can keep those sections from being indexed properly.

Steps to Follow to Test and Check Robots txt File Blocked by Google or Not

- Go to the robots.txt tester tool and scroll through the robots.txt code to locate the highlighted syntax warnings and logic errors. The number of syntax warnings and logic errors is shown immediately below the editor.

- Type in the URL of a page on your site in the text box at the bottom of the page.

- Select the user-agent you want to simulate in the dropdown list to the right of the text box.

- Click the TEST button to test access.

- Check to see if TEST button now reads ACCEPTED or BLOCKED to find out if the URL you entered is blocked from Google web crawlers.

- Edit the file on the page and retest as necessary. Note that changes made in the page are not saved to your site! See the next step.

- Copy your changes to your robots.txt file on your site. This tool does not make changes to the actual file on your site; it only tests against the copy hosted in the tool.
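The test, edit, and retest loop in the steps above can be imitated on a local copy of the file. This is a minimal sketch under stated assumptions: `check_access`, the draft rules, and the URL are made up for illustration, and the ACCEPTED and BLOCKED labels simply mirror the wording of the steps. As with the tool, nothing here touches the live file on your site.

```python
from urllib.robotparser import RobotFileParser


def check_access(robots_txt, url, agent="Googlebot"):
    """Return "ACCEPTED" or "BLOCKED" for `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return "ACCEPTED" if parser.can_fetch(agent, url) else "BLOCKED"


# Hypothetical local copy of the file and a URL to test.
draft = "User-agent: *\nDisallow: /shop/\n"
url = "https://www.example.com/shop/shoes"

before = check_access(draft, url)

# Edit the local copy so only the cart is blocked, then retest.
edited = draft.replace("Disallow: /shop/", "Disallow: /shop/cart/")
after = check_access(edited, url)

print(before, "->", after)  # BLOCKED -> ACCEPTED
```

Once the retest gives the result you want, the edited rules still have to be copied to the robots.txt file on the site by hand.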

Source: https://support.google.com/webmasters/answer/6062598?hl=en